Absolute Return Podcast Recap: Zapata AI CEO Answers Potential Investor Questions on Industrial Generative AI

Last week, Zapata AI CEO Christopher Savoie joined the Absolute Return Podcast, a podcast focused on stock market analysis and investment strategy. In light of the recent announcement that we will go public in a business combination with Andretti Acquisition Corporation, hosts Julian Klymochko and Michael Kesslering asked Christopher the burning questions on the mind of any investor interested in Zapata AI, such as:

- What is generative AI?

- What is Industrial Generative AI?

- What are the applications for enterprises?

- What does Zapata AI bring to the table for these applications?

For answers to all these questions and more, you can listen to the podcast here, or read on for a recap of Christopher’s responses on the podcast.

What is generative AI, and what can it accomplish?

To provide some context, generative AI is a kind of machine learning. The basic idea is that you feed data into a machine and the machine learns the statistical patterns and interrelations in the data. Of course, humans can learn things too. But computers can learn trends and patterns in massive datasets that humans wouldn’t have the brainpower to process or identify.

But rather than doing things that would be impossible for humans, generative AI emulates human creative abilities that were once impossible for computers. You’ve probably seen generative AI tools like ChatGPT that can generate text that feels like a human wrote it, or AI art tools that can generate images that look like a human painted them. Rather than simply classifying data as traditional machine learning does (is this painting a Van Gogh or a Monet?), generative AI can generate new data (here is a new painting in the style of Monet).

As Christopher put it, “The machine learning that we’ve had up until now really is rote memorization. You show it a thousand pictures of cats and it learns those thousand pictures of cats.” On the other hand, generative AI can say “I know a cat, so, and I know Picasso, so I can actually draw a Picasso cat, and we’ll recognize as a human that it’s a Picasso-ish cat, because it’s got a model for what is Picasso, and what is cat.”

That ability to generalize what a cat is or what a Picasso looks like is new. “Generalization means that you have this big statistical model of what a cat is. It’s not just that it has four legs and this and that. It can have a probability density of different tails, different tail sizes, different paw sizes, different colors, and all of this stuff.” Once you have a statistical model of a cat, you can create new images of cats that color outside the lines — like making a Picasso cat.
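To make the idea of a "probability density of different tail sizes" concrete, here is a minimal sketch in Python. It is only an illustration, not the kind of model Zapata AI or ChatGPT uses: it assumes a single made-up feature (tail length in centimeters), synthetic training data, and a simple normal distribution standing in for the statistical model. The names `observed_tail_cm`, `tail_model`, and `new_tails` are hypothetical.

```python
import random
from statistics import NormalDist

# Synthetic "training data": tail lengths (cm) of 1,000 imaginary cats.
# The true mean (25) and spread (3) are made-up numbers for illustration.
rng = random.Random(0)
observed_tail_cm = [rng.gauss(25.0, 3.0) for _ in range(1000)]

# "Training": estimate a probability density from the observations.
tail_model = NormalDist.from_samples(observed_tail_cm)

# "Generation": sample new tail lengths from the learned density.
# These values are drawn from the model, not copied from the training set.
new_tails = tail_model.samples(5, seed=1)

print(f"learned mean={tail_model.mean:.1f} cm, stdev={tail_model.stdev:.1f} cm")
print("generated tails:", [round(t, 1) for t in new_tails])
```

A real generative model learns a joint density over a great many features at once (tails, paws, colors, and so on), but the two steps are the same: estimate the distribution, then sample from it.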

Of course, in a business setting it’s not just about pictures of cats. “We can use this technology to be creative in all kinds of human areas, like engineering a new bridge, engineering a new financial product, all these kind of things. And that’s what’s really surprising and powerful about this new generation of machine learning compared to what we’ve had before.”

Zapata AI spun out of Harvard in 2017. How did it get involved in generative AI?

Zapata AI came out of a lab that did quantum algorithms. What are quantum algorithms? “Well, it’s a kind of complex math involving linear algebra to address high dimensional space problems,” said Christopher. “From the very beginning we were looking at places where we could apply this to industrial applications. So, where can we use linear algebra, this high dimensional, really difficult math to solve practical problems in industry and in business?”

“We realized very quickly that generative modeling, as in the statistical modeling of a cat’s tail or its paws, was very similar to the math we were using for chemistry and physics problems in the lab we were in.” Zapata AI filed its first patent for generative modeling in 2018, less than a year after it was founded. “So, we spun the technology out, did a lot of work on the IP, did a lot of development, and developed a platform that allows us to train and compare these models. And then we got engaged with a bunch of different companies in energy and other sectors that had these problems and wanted to apply these algorithms to their actual business problems.”

From a competitive perspective, how are you able to offer faster, cheaper, and more accurate generative AI?

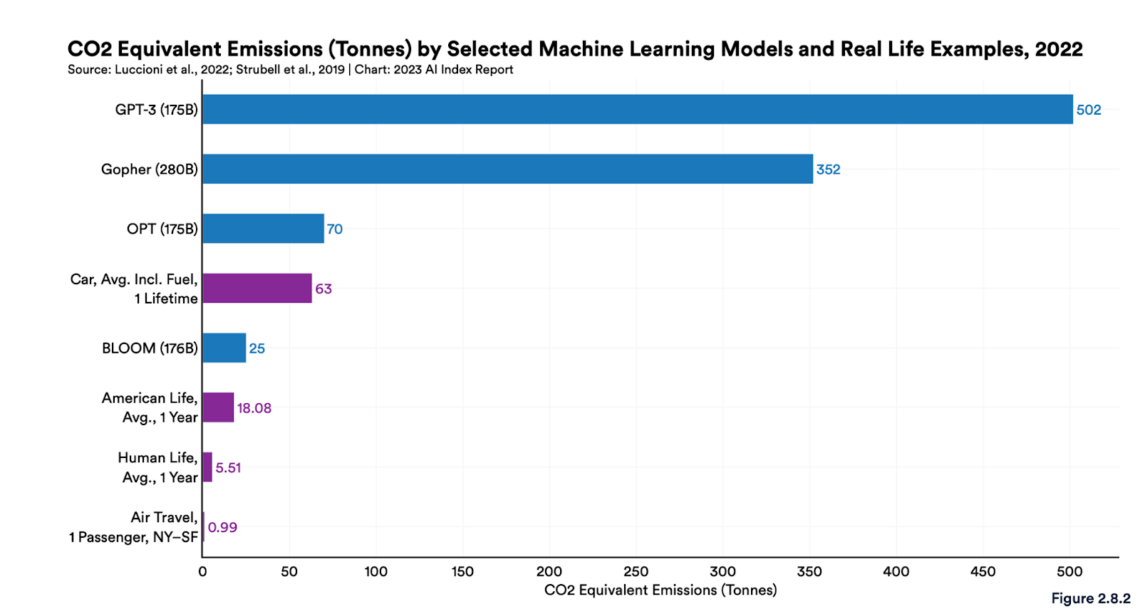

The GPT-4 model behind ChatGPT is great, and GPT-5 will eventually be even better. But these large language models are getting bigger by orders of magnitude each time — which is great for players like Jensen and Nvidia that make the GPUs required to run these LLMs. But GPUs are extremely expensive, and so the larger the model the more expensive it will be to run on these GPUs. They also consume a lot of electricity, so they’re not sustainable environmentally or financially from a business perspective.

Source: 2023 AI Index Report

So, what does Zapata AI do differently? “The simple trite, pithy answer would be math. But it’s a different kind of math that we’re using.” LLMs like GPT-4 use math based on neural networks, which work great until they start using an unsustainable amount of GPUs. “The math that we have coming out of the quantum physics world is designed to do that high-dimensional math more efficiently.”

“We deal with these complex statistical models all the time in quantum physics and quantum chemistry. In those cases, the models are immense in terms of the variables and the variable space. We’ve been doing that kind of high-dimensional math for decades in the physics world with a different kind of model, a different kind of math. And now we’re applying that math to machine learning with a completely new model that is actually quicker, faster, better. It’s these quantum algorithms that’ll eventually run on quantum hardware, but they can run actually on GPUs today much faster than neural networks can.”

In terms of practical applications of your technology, can you describe how you’re using generative AI to support the race strategy for your customer Andretti Autosport?

A lot of the strategy in race car driving comes down to when you take a pit stop. When you’re traveling over 200 miles an hour, the tires wear down quickly and the car gets slower over time. You need to change the tires a few times in the race, but each stop costs precious time. So, when and how you pit is how you win or lose a race.

“To figure out when a tire is degrading or how it’s degrading or when that speed is falling off, you need really good physical models of this stuff.” One of the key variables is the slip angle of the tire. But despite having dozens of sensors on the car when it’s going around the track, you can’t measure slip angle directly. “You can’t put accelerometers on the outside of an open wheel racing car because if you rub the wall, it’s gone. They also throw off the balance. You can’t use GPS either because you’re going 200 miles an hour, and GPS is too slow. Cameras are not accurate enough.”

So, what do you do? “Well, we have 20 years of historical data of cars going around the track with all these hundreds or so sensors.” Using generative AI, we can train a model on this data to the point where we can infer what the slip angle is based on correlations with data we do have sensors for. “We’re able to use generative AI to very accurately generate an inferred sensor that we can feed into our tire degradation models and our race strategy models to figure out when we should pit the car, which is a win or lose decision oftentimes in the racing world.”
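The general idea of an inferred (or "virtual") sensor can be sketched in a few lines of Python. This is a deliberately simplified illustration under stated assumptions, not the model used with Andretti: it uses ordinary least squares on one measured channel as a stand-in for the generative model, all data is synthetic, and it assumes that reference values for the hidden quantity are available for the historical laps used in training. The names `sensor_reading`, `hidden_quantity`, `fit_line`, and `virtual_sensor` are hypothetical.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares fit of y ≈ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic "historical laps". Every number here is made up.
rng = random.Random(42)
# A channel we CAN measure on the car (stands for any real sensor).
sensor_reading = [rng.uniform(0.5, 4.0) for _ in range(500)]
# The quantity we CANNOT measure live; assumed known for training only.
hidden_quantity = [1.8 * x + 0.3 + rng.gauss(0, 0.1) for x in sensor_reading]

# Learn the correlation between the measured channel and the hidden one.
slope, intercept = fit_line(sensor_reading, hidden_quantity)


def virtual_sensor(reading):
    """Infer the hidden quantity from a live reading of the measured channel."""
    return slope * reading + intercept


estimate = virtual_sensor(2.0)
print(f"inferred value at reading 2.0: {estimate:.2f}")
```

In practice the inference would draw on many channels at once and on a far richer model, but the structure is the same: learn the relationship from historical data, then use it at race time to estimate a value no physical sensor can report.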

Zapata AI calls itself the Industrial Generative AI Company. How is Industrial Generative AI different from regular generative AI?

Industrial Generative AI takes generative models similar to those behind popular generative AI tools like ChatGPT and tailors them to problems specific to an enterprise, industry, or business function, with a focus on industrial problems.

Industrial problems typically share a few challenges. They involve a lot of uncertainty and unpredictability and vast numbers of possible solutions. They are often time sensitive and require high degrees of accuracy and reliability.

There are usually limits on compute resources too. For example, for virtual sensor applications like the work with Andretti on the racetrack or in a mining operation deep underground, you won’t be able to run massive models that require dozens of GPUs.

Industrial problems often have intense data handling requirements: data from sensors and other sources is housed in multiple places and needs to be cleaned and processed before it can be fed into the generative model. There are also often security concerns when sensitive, private data is involved. All of these are key considerations for us as we develop and deliver our Industrial Generative AI solutions.

From an investor’s perspective, you look at NVIDIA’s share price and clearly they’re going to make a lot of money with all the GPUs being acquired and snapped up. OpenAI also has a multi-billion-dollar valuation with its generative AI offering for enterprises. With respect to Zapata AI, what is the business model and how will the technology translate to profits for investors?

The tools provided by companies like OpenAI are clearly very powerful. “But the idea that you’re going to take a model that’s trained on the entire internet, trained on Ozzy Osbourne lyrics and Aesop’s Fables and children’s novels, and apply that to filling in a customs form for a company is a little bit silly. You don’t need a model the size of GPT-4 for most enterprise use cases. Maybe you can use parts of that model, but it needs to be retrained.”

The quantum math we use at Zapata means we can make these LLMs smaller and more trainable, which makes them more cost-effective to retrain for problems specific to your business. “You can train your model on the data that you actually care about instead of the entire internet, which can lead to hallucinations where the model makes things up. That just isn’t acceptable when you’re trying to fill in an FDA application or dealing with people’s financial data.”

LLMs aside, we can also use generative AI for use cases like the virtual slip angle sensor with Andretti, where we’re dealing with numerical and time series data instead of language data. There are similar use cases in other industrial settings like manufacturing and heavy machinery, but also in finance and insurance where you want to generate data for hypothetical scenarios. “There are a lot of companies now looking into how do we use not just the language models, but these numerical models to do really interesting, important things in data analysis inside companies. I think it’s not even a question that people are going to invest in and spend money on new data analysis techniques in this world of data-driven decision making.”