What is Industrial Generative AI?

Here’s how we define Industrial Generative AI:

Industrial Generative AI is a category of enterprise software that tailors generative AI to domain-specific, computationally complex problems found across industries.

We refer to these computationally complex problems as industrial problems. They are found in every industry, not just those typically considered “industrial,” and are defined by seven common challenges: data disarray, large solution spaces, unpredictability, time sensitivity, compute constraints, a demand for accuracy and reliability, and security needs.

Let’s break the definition of Industrial Generative AI into its key components:

Industrial Generative AI is a subcategory of generative AI, which refers to AI technologies that can generate new, realistic data — whether that’s text, images, code, time-series data or other outputs. Generative AI can be used in various industries and settings to automate tasks, improve analytics, and generate valuable content, depending on the output generated.

At the heart of generative AI are generative models, which are machine learning models that learn the patterns in their training data to create new data that follows those patterns. The most well-known generative models are Large Language Models (LLMs), which are used to generate human-like text and can be found in popular tools like ChatGPT.
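To make the idea concrete, here is a deliberately tiny sketch. The “model” is a one-dimensional normal distribution, an assumption chosen for readability; LLMs learn vastly richer patterns, but the loop is the same: learn the pattern from training data, then sample new data that follows it.

```python
import random
import statistics

def fit_gaussian(data):
    """'Train' the model: learn the mean and standard deviation of the data."""
    return statistics.fmean(data), statistics.stdev(data)

def generate(mu, sigma, n, seed=0):
    """Sample n new points that follow the learned pattern (a normal distribution)."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]

# Hypothetical training data, e.g. readings from a single sensor.
training_data = [9.8, 10.1, 10.4, 9.9, 10.2, 9.7, 10.0, 10.3]
mu, sigma = fit_gaussian(training_data)
samples = generate(mu, sigma, 1000)
print(f"learned mean={mu:.2f}, std={sigma:.3f}")
print(f"mean of generated data={statistics.fmean(samples):.2f}")
```

The generated points are new values that never appeared in the training set, yet they share its statistical pattern.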

The focus on domain-specific problems within enterprises is what sets Industrial Generative AI apart from the more popular consumer-oriented forms of generative AI, such as OpenAI’s ChatGPT and DALL-E. Whereas publicly accessible generative AI tools are designed to be general-purpose, Industrial Generative AI is custom fit to problems specific to an enterprise or industry.

These general-purpose tools may have some use in certain industrial or enterprise settings, but generally they are better used as foundations that can be fine-tuned to the nuances of an enterprise problem. However, these publicly available foundation models are typically extremely heavyweight, requiring hundreds if not thousands of expensive GPUs (which are in short supply), making them unnecessarily large, expensive and impractical for most enterprise use cases.

In contrast, Industrial Generative AI tailors generative models to the unique data and infrastructure found within an enterprise. In addition to fine-tuning foundation models, Industrial Generative AI leverages lightweight, smaller, or compressed models that are optimized to meet performance needs at the lowest cost. In the case of LLMs, these lightweight models can be set up to interact with other software systems, such as database endpoints, embedding them within the enterprise architecture in a compatible and effective way.
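A minimal sketch of that integration pattern, assuming the model has been fine-tuned to emit a small JSON “tool call” instead of free text. The application, not the model, validates the call against an allow-list and runs a parameterized query. The table, the `get_inventory` tool, and the JSON format are hypothetical; no particular LLM API is assumed.

```python
import json
import sqlite3
from typing import Optional

# Hypothetical in-memory database standing in for an enterprise system.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE inventory (part TEXT PRIMARY KEY, quantity INTEGER)")
conn.executemany("INSERT INTO inventory VALUES (?, ?)", [("bolt", 120), ("gear", 8)])

def get_inventory(part: str) -> Optional[int]:
    """Hypothetical tool: look up stock for one part using a parameterized query."""
    row = conn.execute("SELECT quantity FROM inventory WHERE part = ?", (part,)).fetchone()
    return row[0] if row else None

# Allow-list of tools the model may invoke; anything else is rejected.
TOOLS = {"get_inventory": get_inventory}

def dispatch(model_output: str):
    """Parse a JSON tool call emitted by the model and route it to a registered tool."""
    call = json.loads(model_output)
    tool = TOOLS.get(call.get("tool"))
    if tool is None:
        raise ValueError(f"unknown tool: {call.get('tool')!r}")
    return tool(**call.get("arguments", {}))

# A string a fine-tuned model might emit (the JSON format is an assumption).
print(dispatch('{"tool": "get_inventory", "arguments": {"part": "gear"}}'))  # 8
```

Keeping the model on the “propose” side and the application on the “execute” side means the database only ever sees queries the enterprise has explicitly allowed.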

It’s also important to note that Industrial Generative AI goes beyond the text and image generation that has been the poster child for generative AI. In fact, the most valuable use cases may be in supporting business analytics challenges requiring complex mathematical models that can be found across enterprises. This includes enriching datasets with synthetic data similar to existing data, simulating future scenarios, or generating optimized recommendations.

For more on how Industrial Generative AI can enrich business analytics, check out our recent blog post on this topic.

From our perspective, there are seven key challenges that define a typical industrial problem. These challenges make industrial problems difficult to solve with traditional computing-based solutions. Industrial Generative AI is thus specifically engineered to address these challenges and bring the transformative value of generative AI to industrial problems.

Data disarray

Enterprise-scale companies, no matter the industry, invariably deal with massive quantities of data across their organizations. This data is often incomplete, fragmented, dispersed across locations, out of sync, and/or noisy (in other words, the meaningful signal in the data must be extracted from extraneous and irrelevant noise).

This disarray can be a problem when the data is needed to train a generative AI model, requiring significant data cleaning, processing, and streamlining. But it’s also a problem for any analytics use case, preventing this valuable data from feeding into analytics that can inform important business decisions.

Fortunately, generative AI can help fill in the gaps. Generative models trained to capture data distributions can be used to accurately fill in missing data or create data for hypothetical scenarios for which data currently doesn’t exist. These models can also help identify anomalies in the data, which is useful for fraud detection or quality control, for example.
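A minimal sketch of both ideas, assuming the simplest possible generative model: a two-variable Gaussian fitted to complete records (the sensor pairs below are hypothetical). Under that assumption, the best fill-in for a missing value is its conditional mean given the observed one, and a record is suspicious when it sits far from that conditional mean. Production systems would use much richer models, but the logic is the same.

```python
import statistics

def fit_bivariate_gaussian(pairs):
    """Learn a two-variable Gaussian from complete (x, y) records."""
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    var_x = sum((x - mx) ** 2 for x in xs) / (len(pairs) - 1)
    cov_xy = sum((x - mx) * (y - my) for x, y in pairs) / (len(pairs) - 1)
    slope = cov_xy / var_x
    # Spread of y around its conditional mean, used to score anomalies.
    residuals = [y - (my + slope * (x - mx)) for x, y in pairs]
    return mx, my, slope, statistics.stdev(residuals)

def impute(model, x):
    """Fill a missing y with its conditional mean E[y | x] under the fitted Gaussian."""
    mx, my, slope, _ = model
    return my + slope * (x - mx)

def is_anomaly(model, x, y, z=3.0):
    """Flag a record whose y is more than z residual standard deviations from E[y | x]."""
    return abs(y - impute(model, x)) > z * model[3]

# Hypothetical (temperature, pressure) records with no missing values.
complete = [(1, 3.1), (2, 4.9), (3, 7.2), (4, 8.8), (5, 11.1), (6, 12.9)]
model = fit_bivariate_gaussian(complete)
print(f"imputed pressure at temperature 7: {impute(model, 7):.2f}")  # 14.92
print(is_anomaly(model, 7, 15.0), is_anomaly(model, 7, 20.0))        # False True
```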

Large solution spaces

Industrial-scale problems typically have many variables, resulting in a very large number of possible solutions that business leaders must choose from. This is particularly true for optimization problems, where business leaders are looking for the most efficient and/or cost-effective way to run some part of their business. Generally, for a given type of optimization problem, the larger the solution space, the more an optimization algorithm will struggle to find the best solution.
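To get a feel for how fast the space grows, here is a back-of-the-envelope count for a simplified scheduling problem. The model is an illustrative assumption (jobs ordered on one line, or each job assigned to one of several machines), not the setup from any particular project.

```python
import math

def sequence_count(n_jobs: int) -> int:
    """Number of ways to order n jobs on a single line: n!"""
    return math.factorial(n_jobs)

def assignment_count(n_jobs: int, n_machines: int) -> int:
    """Number of ways to assign each job to one of m machines: m ** n."""
    return n_machines ** n_jobs

for n in (5, 10, 20, 30):
    print(f"{n:>2} jobs: {sequence_count(n):.3e} orderings, "
          f"{assignment_count(n, 4):.3e} assignments to 4 machines")
```

With only 20 jobs there are already about 2.4 × 10^18 possible orderings, far too many to check one by one, which is why optimization algorithms must search the space selectively.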

As it turns out, large solution spaces are also a hallmark of problems where quantum computers are expected to deliver an advantage, as they can theoretically explore these high-dimensional spaces much faster than a classical computer. In the absence of fault-tolerant quantum computers, however, techniques that quantum physicists and quantum information scientists originally used to explore high-dimensional spaces on classical computers can provide an advantage for these optimization problems today, without quantum hardware. For a deeper dive on how quantum science enhances generative AI, check out our blog post on this topic.

In our work with BMW and MIT, we used generative AI models built using these quantum techniques to propose new solutions to help BMW optimize their manufacturing plant scheduling. One of the key findings was that the quantum techniques for generative AI outperformed state-of-the-art optimization algorithms the most when the solution space was the largest. You can learn more about Industrial Generative AI for optimization and the BMW work here.

Unpredictability

The larger the enterprise and the larger the problem, the more uncertainty is typically in the equation. It is difficult to predict, for example, how unforeseen interest rate changes will affect the risk of an investment or demand for different products. This unpredictability inevitably reduces the reliability of the business analytics that leaders depend on to make key decisions.

No matter how much data one has, it is always finite. We need something intelligent to extend our understanding into the unknown and extrapolate data for new or hypothetical scenarios. Fortunately, generative models that leverage unique mathematics based on quantum science, as discussed in the previous section, have also demonstrated novel advantages for generating high-quality, unseen data.

Our research found that these quantum techniques showed up to a 68x enhancement in generating unseen and valid data compared to a conventional generative model. This could provide a valuable advantage for simulating uncertain scenarios and yielding better solutions faster.
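The post does not define how “unseen and valid” is measured. One simple way to make the idea concrete, offered here as an assumption rather than the metric behind the 68x figure, is the fraction of generated samples that satisfy the problem’s validity rule and do not already appear in the training set. The validity rule below (six-bit strings with exactly three 1s) is hypothetical.

```python
def is_valid(bits: str) -> bool:
    """Hypothetical validity rule: a six-bit string with exactly three 1s."""
    return len(bits) == 6 and set(bits) <= {"0", "1"} and bits.count("1") == 3

def unseen_valid_rate(generated, training, valid=is_valid):
    """Fraction of generated samples that are valid and absent from the training set."""
    seen = set(training)
    hits = sum(1 for s in generated if valid(s) and s not in seen)
    return hits / len(generated)

training = ["111000", "000111", "101010"]
generated = ["111000", "110100", "011001", "111100", "010101"]
print(unseen_valid_rate(generated, training))  # 0.6
```

Here “111000” is valid but merely memorized, “111100” is new but invalid, and the other three count, giving 0.6. Comparing this rate between two models trained on the same data is one way to judge which one generalizes better.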

Time sensitivity

Industrial problems often require real-time answers and cannot afford delays, whether in international shipping and logistics or in algorithmic trading and real-time risk management. Thus, an industrial problem is partly defined by the constraints on how quickly a solution must be calculated.

Different mathematical approaches span a range between finding the best possible solution eventually and finding a good-enough solution quickly. Knowing which approach will be the best fit for the time constraints of the problem requires dedicated expertise and benchmark testing.
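A small, hypothetical budget-allocation problem (a 0/1 knapsack) illustrates the two ends of that range. Exhaustive search always finds the best answer, but its cost doubles with every added item; a greedy rule that ranks items by value per unit cost is nearly instant but can miss the optimum. The projects and numbers are made up for illustration.

```python
from itertools import combinations

# Hypothetical projects: (name, cost, value). Total cost must stay within BUDGET.
PROJECTS = [("X", 6, 60), ("Y", 5, 45), ("Z", 5, 45)]
BUDGET = 10

def exact_best(items, budget):
    """Exhaustive search: guaranteed optimal, but checks all 2**n subsets."""
    best_value, best_names = 0, []
    for r in range(len(items) + 1):
        for subset in combinations(items, r):
            cost = sum(c for _, c, _ in subset)
            value = sum(v for _, _, v in subset)
            if cost <= budget and value > best_value:
                best_value, best_names = value, sorted(n for n, _, _ in subset)
    return best_value, best_names

def greedy(items, budget):
    """Heuristic: take items by value per unit cost; O(n log n) but not always optimal."""
    spent, total, chosen = 0, 0, []
    for name, cost, value in sorted(items, key=lambda it: it[2] / it[1], reverse=True):
        if spent + cost <= budget:
            spent += cost
            total += value
            chosen.append(name)
    return total, sorted(chosen)

print("exact :", exact_best(PROJECTS, BUDGET))   # (90, ['Y', 'Z'])
print("greedy:", greedy(PROJECTS, BUDGET))       # (60, ['X'])
```

Here the greedy rule grabs the single most cost-efficient project and runs out of budget, while exhaustive search finds a better combination. Benchmarking both on realistic instances is how one decides whether the quality gap is worth the extra time.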

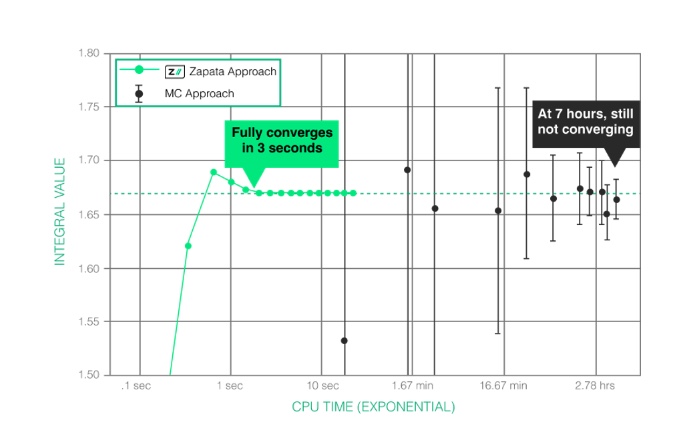

Quantum techniques, like those used for optimization, can also speed up computationally intensive and time-consuming calculations. Consider Monte Carlo simulations, which are often used in the financial services industry to price derivatives, assess investment risk, and run stress tests. These simulations can take hours to run using traditional methods.

By using quantum techniques, we demonstrated a 1,000x to 10,000x speedup, arriving at a more accurate solution in three seconds than a typical Monte Carlo simulation would reach after seven hours (seven hours is 25,200 seconds, about 8,400 times longer). This type of speedup could provide a huge advantage when it comes to beating the market with a new investment, for example.
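For context, the conventional approach referred to above can be sketched in a few lines. This is a textbook plain Monte Carlo pricer for a single European call option under Black-Scholes assumptions, with illustrative parameters; it is the classical baseline, not the quantum-inspired method described in this post, and the closed-form price serves as a check.

```python
import math
import random

def norm_cdf(x):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def bs_call(s0, k, r, sigma, t):
    """Closed-form Black-Scholes price of a European call (the reference answer)."""
    d1 = (math.log(s0 / k) + (r + 0.5 * sigma ** 2) * t) / (sigma * math.sqrt(t))
    d2 = d1 - sigma * math.sqrt(t)
    return s0 * norm_cdf(d1) - k * math.exp(-r * t) * norm_cdf(d2)

def mc_call(s0, k, r, sigma, t, n_paths, seed=0):
    """Plain Monte Carlo: simulate terminal prices under geometric Brownian motion."""
    rng = random.Random(seed)
    drift = (r - 0.5 * sigma ** 2) * t
    vol = sigma * math.sqrt(t)
    total = 0.0
    for _ in range(n_paths):
        s_t = s0 * math.exp(drift + vol * rng.gauss(0.0, 1.0))
        total += max(s_t - k, 0.0)  # call payoff at expiry
    return math.exp(-r * t) * total / n_paths  # discounted average payoff

params = dict(s0=100.0, k=100.0, r=0.05, sigma=0.2, t=1.0)
exact = bs_call(**params)
for n in (1_000, 10_000, 100_000):
    estimate = mc_call(**params, n_paths=n)
    print(f"{n:>7} paths: {estimate:.4f} (error {abs(estimate - exact):.4f})")
```

The statistical error of plain Monte Carlo shrinks only in proportion to 1/√N, so each additional digit of accuracy costs roughly 100 times more simulated paths. Repeated across large portfolios, scenarios, and risk measures, that scaling is what pushes conventional runs into hours.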

Compute constraints

Industrial problems are also partly defined by limits on the computational power available to calculate solutions. For example, on edge devices, like remote machinery for mining, construction, or manufacturing, there won’t be sufficient space or power to run large machine learning models. Or, as in financial services, the volume of data and complexity of the calculations necessary to meet regulatory requirements can grow over time to exceed the limits of a firm’s existing compute infrastructure.

We’ve found that quantum techniques can also help compress large computational models, such as the LLMs that power AI chatbots like ChatGPT, with the potential to reduce compute costs and unlock new use cases on the edge.

As we discussed in a previous post, we believe we have set the record for the largest language model to be compressed using quantum techniques (GPT2-XL), and we are working to compress larger models with the same techniques. These compressed models have fewer parameters, meaning they require fewer compute resources, and have higher accuracy than uncompressed models of the same size.
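The post does not spell out its quantum-inspired compression method, so the sketch below uses a simpler classical stand-in to show the basic trade-off: replacing one weight matrix with the product of two thin matrices via truncated singular value decomposition (SVD). The synthetic matrix is built to be nearly low-rank; real LLM weights are not guaranteed to compress this cleanly, and this should not be read as the method used for GPT2-XL.

```python
import numpy as np

def low_rank_compress(w: np.ndarray, rank: int):
    """Factor W (d_out x d_in) into A (d_out x rank) @ B (rank x d_in) via truncated SVD."""
    u, s, vt = np.linalg.svd(w, full_matrices=False)
    a = u[:, :rank] * s[:rank]  # fold the top singular values into the left factor
    b = vt[:rank, :]
    return a, b

rng = np.random.default_rng(0)
# Synthetic weight matrix: rank-16 structure plus a little noise.
d_out, d_in, true_rank = 256, 512, 16
w = rng.standard_normal((d_out, true_rank)) @ rng.standard_normal((true_rank, d_in))
w += 0.01 * rng.standard_normal((d_out, d_in))

a, b = low_rank_compress(w, rank=16)
rel_err = np.linalg.norm(w - a @ b) / np.linalg.norm(w)
print(f"parameters: {w.size} -> {a.size + b.size}")
print(f"relative reconstruction error: {rel_err:.4f}")
```

The layer drops from 131,072 to 12,288 parameters (about 10.7x fewer) while reconstructing the original matrix almost exactly, because the matrix was constructed to have rank-16 structure. The chosen rank is the knob that trades size against accuracy.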

Accuracy and reliability

Industrial problems typically require high accuracy, precision, and reliability. Business leaders in these situations need the most accurate and reliable computational solutions given their compute budget and time constraints. When it comes to generative AI use cases, inaccurate or implausible outputs may not be acceptable for industrial problems.

Therefore, when building Industrial Generative AI applications, it’s important to benchmark many candidate solutions before selecting the final one. Mission-critical metrics can be included in this benchmarking process and balanced against compute and time constraints.
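One way to make that balancing act explicit is to treat compute and time budgets as hard constraints and then rank the remaining candidates by accuracy. The sketch below assumes that policy; the candidate names and benchmark numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Candidate:
    name: str
    accuracy: float      # share of benchmark cases answered correctly, 0 to 1
    latency_ms: float    # time to produce one answer
    cost_per_run: float  # compute cost per answer, in dollars

def select(candidates: List[Candidate], max_latency_ms: float, max_cost: float,
           min_accuracy: float) -> Optional[Candidate]:
    """Drop candidates that break any hard constraint, then return the most accurate one."""
    feasible = [c for c in candidates
                if c.latency_ms <= max_latency_ms
                and c.cost_per_run <= max_cost
                and c.accuracy >= min_accuracy]
    return max(feasible, key=lambda c: c.accuracy, default=None)

# Hypothetical benchmark results for three candidate approaches.
CANDIDATES = [
    Candidate("large_llm", accuracy=0.97, latency_ms=900, cost_per_run=0.50),
    Candidate("compressed_llm", accuracy=0.95, latency_ms=120, cost_per_run=0.05),
    Candidate("classical_baseline", accuracy=0.90, latency_ms=10, cost_per_run=0.001),
]

best = select(CANDIDATES, max_latency_ms=200, max_cost=0.10, min_accuracy=0.92)
print(best.name if best else "no candidate meets the constraints")  # compressed_llm
```

If no candidate survives the constraints, the function returns None, which is itself a useful benchmark finding: either the requirements need revisiting or a new approach is needed.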

We see these mission-critical requirements firsthand in our work with Andretti Autosport. When races can be decided by milliseconds, precision counts. To support the team’s race strategy, we used generative AI to generate real-time data for a key race variable (slip angle) that would be impossible to measure with physical sensors. In testing this “virtual sensor” data, we found it was over 99% accurate compared with the real values. Check out our case study on the work we’re doing with the Andretti team.

Security

Global enterprises in regulated industries or industries that handle sensitive customer data have high cybersecurity standards. This can pose a risk when using generative AI, particularly LLMs. There are three layers of cybersecurity risks when using generative AI: the risk of sharing sensitive data with an external LLM provider; the security of the generative model itself; and unauthorized access to sensitive data via an LLM that was trained on this data.

Enterprises in regulated industries like healthcare or finance have strict policies around sharing sensitive data with third parties, and LLM providers should be no exception. Enterprise cybersecurity leaders should make sure that external LLM providers are not training their public models on the sensitive data shared with their services. This risk can be avoided altogether by training and running generative models in the enterprise’s secure environment, whether on premises or in the cloud.

However, once trained, these models are perhaps more valuable to malicious actors than the data itself, as they distill and provide easy access to the key information in the data. Thus, any measures taken to protect enterprise data should also be applied to protect the models trained on this data. It’s also important to control access to LLMs trained on sensitive data so that unauthorized users cannot reach the underlying sensitive data through them.
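Access control for models can follow the same deny-by-default pattern used for sensitive databases. The sketch below is a minimal, hypothetical role check placed in front of model inference; the model names, roles, and the `fake_backend` stand-in are all assumptions, and a real deployment would rely on the enterprise’s existing identity and access management system.

```python
from typing import Callable, Set

# Hypothetical policy: which roles may query which internally trained model.
MODEL_ACL = {
    "claims-llm": {"claims_analyst", "compliance"},  # trained on sensitive claims data
    "handbook-llm": {"employee", "claims_analyst", "compliance"},
}

class AccessDenied(Exception):
    """Raised when no role held by the user is permitted to query the model."""

def query_model(user_roles: Set[str], model_name: str, prompt: str,
                backend: Callable[[str, str], str]) -> str:
    """Check the caller's roles before forwarding the prompt to the model backend."""
    allowed = MODEL_ACL.get(model_name, set())  # unknown models are denied by default
    if not (user_roles & allowed):
        raise AccessDenied(f"no permitted role for {model_name!r}")
    return backend(model_name, prompt)

def fake_backend(model_name: str, prompt: str) -> str:
    """Stand-in for the real inference call."""
    return f"[{model_name}] answer to: {prompt}"

print(query_model({"claims_analyst"}, "claims-llm", "Summarize open claims", fake_backend))
```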

Below are just a few examples of industrial problems that Industrial Generative AI can address:

Data Augmentation: Generating additional training data to improve machine learning models for various applications, like image recognition and natural language processing.

Prototyping and Product Design: Creating early-stage prototypes, product designs, and models to speed up the product development process.

Anomaly Detection: Generating synthetic data and enhanced models to train systems that detect anomalies or outliers in various datasets, aiding fraud detection or quality control.

Content Inquiry: Creating a natural language-based interface for searching, retrieving and gaining insights on unstructured datasets.

Scenario Simulation: Creating simulated scenarios for training, testing, or strategizing in fields such as finance, cybersecurity, motorsports, defense and disaster planning.

Automated Report Generation: Creating reports, summaries, and insights from raw data, making it easier to analyze and present findings.