How to Defend Against Quantum Adversarial AI

Imagine a government building holding some of the country’s most important national security secrets. It’s protected by a facial recognition system that only allows authorized personnel to enter. One day, a spy working for an adversary is able to enter the building and steal government secrets simply by wearing glasses. How?

As it turns out, the glasses are printed with a pattern specifically designed to trick the facial recognition algorithm into thinking the spy is somebody else, namely somebody with authorized access. This may seem like something straight out of a Tom Clancy novel, but it is in fact a documented example of a possible “adversarial AI” attack. In another example, infrared lights placed under the brim of a hat light up the face in a way that deceives a facial recognition system while remaining imperceptible to humans.

Adversarial AI attacks like these use data specifically designed to fool machine learning models and cause them to malfunction. These attacks can target models while they are being trained, or they can be used to deceive already-trained models, as in the facial recognition example above.

As governments, enterprises, and society at large become increasingly reliant on neural networks and other machine learning models, adversarial AI attacks are a growing threat to the reliability of these models. Neglecting these threats may have grave consequences.

In addition to the facial recognition example mentioned above, adversarial AI attacks could bypass malware and spam detection algorithms and trick airport security scanners into classifying dangerous objects as safe. Carefully placed stickers can convince a self-driving car to drive on the wrong side of the road or interpret a stop sign as a speed limit sign. Fraud detection algorithms could be tricked into missing actual fraud, and medical image classification algorithms could be tricked into classifying a malignant tumor as benign.

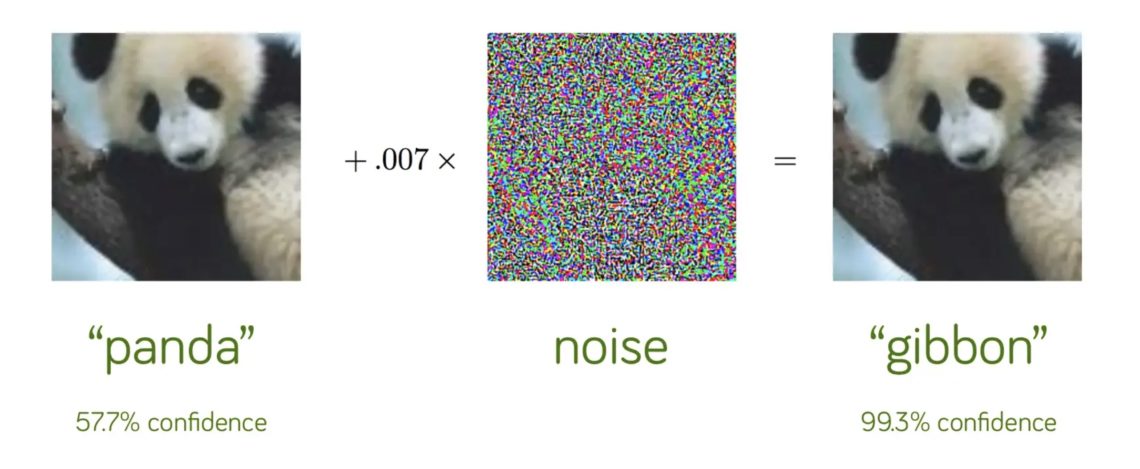

Source: Explaining and Harnessing Adversarial Examples, Goodfellow et al., ICLR 2015.

The deceptive data is hard to spot because it often looks identical to the “real” data to a human observer. In the example above, an image that an image recognition algorithm correctly labeled as a panda is modified with an intentional distortion (the “noise” image between the two pandas) to trick the algorithm into labeling it as a gibbon. Can you spot the differences?
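The paper behind the panda image introduced the fast gradient sign method (FGSM): nudge every input feature by a small amount in whichever direction increases the classifier's loss. Below is a minimal sketch of that idea in plain Python, assuming a toy logistic classifier in place of an image network. The weights, the 100 "pixel" values, and the step size `eps` are made up for illustration and are not taken from the paper.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability that x belongs to class 1 under a logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that raises the
    cross-entropy loss for the true label y (0 or 1)."""
    p = predict(w, b, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical hand-picked weights standing in for a trained model.
w = [1.0 if i % 2 == 0 else -1.0 for i in range(100)]
b = 0.0

# A "clean" input with pixel values near mid-gray, true label 1.
x = [0.5 + 0.02 * wi for wi in w]
x_adv = fgsm(w, b, x, y=1, eps=0.05)

p_clean = predict(w, b, x)        # confidence in class 1 before the attack
p_adv = predict(w, b, x_adv)      # confidence in class 1 after the attack
max_change = max(abs(a - c) for a, c in zip(x_adv, x))
print(p_clean, p_adv, max_change)
```

No pixel moves by more than about 0.05, yet the predicted probability of the true class falls from roughly 0.88 to roughly 0.05, because 100 small nudges all push the score in the same direction.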

Defending Against Adversarial AI

Recently, efforts have been made to make classifiers and other AI systems more resilient against these adversarial attacks. One example is Defense-GAN, which uses a machine learning technique known as a generative adversarial network (GAN) to generate adversarial data, which can then be used to train the classifier to defend it against other adversarial data. Like any good defense, it works by training against the offense it expects to face.

To go back to the facial recognition example from earlier, a facial classifier trained with Defense-GAN would be able to correctly tell the spy with the fancy glasses apart from somebody with authorized access. But what if the spy wore glasses that the facial recognition system wasn’t trained on?

Enter quantum computing.

Quantum computing has the potential to be a potent new adversarial weapon because it may be able to generate adversarial data that would be impractical to generate using classical computers. A purely classical defense like Defense-GAN would thus be unable to train the classifier to be resilient against quantum-generated adversarial data.

On the flip side, anyone with access to a quantum computer could train their classifiers to defend against quantum-adversarial attacks. Along these lines, Zapata was recently granted a patent for a Quantum-Assisted Defense Against Adversarial AI (QDAI) algorithm, the first commercial algorithm for defense against quantum adversarial attacks.

How Quantum Adversarial Defense Works

Our QDAI algorithm works very similarly to the QC-AAN (Quantum Circuit Associative Adversarial Network) framework we used to generate images of handwritten digits, work that was recently published in Physical Review X and which we discussed in a blog post earlier this summer.

The QC-AAN framework is similar to a classical GAN. For the less AI-savvy readers, a GAN is a generative model consisting of two neural networks: a generator and a discriminator. The generator learns from real data (for example, images) and tries to create synthetic data that resembles the data it was trained on. The discriminator can be thought of as a simple classifier: it classifies each sample presented to it as real or generated. The feedback from the discriminator gradually trains the generator to create data that the discriminator is unable to tell apart from the real data.
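The generator and discriminator feedback loop can be sketched in a few lines of plain Python. This is a toy under heavy assumptions: the "networks" are one-dimensional linear functions, the real data is drawn from a made-up Gaussian, and the learning rate and step count are arbitrary. It shows the alternating updates only, not a production GAN.

```python
import math
import random

def sigmoid(t):
    t = max(-60.0, min(60.0, t))          # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-t))

def d_loss(u, v, real, fake):
    """Discriminator D(x) = sigmoid(u*x + v): real samples labeled 1, generated 0."""
    tiny = 1e-12
    total = sum(math.log(sigmoid(u * x + v) + tiny) for x in real)
    total += sum(math.log(1.0 - sigmoid(u * x + v) + tiny) for x in fake)
    return -total / len(real)

def d_step(u, v, real, fake, lr):
    """One gradient-descent step on the discriminator loss."""
    gu = gv = 0.0
    for x in real:
        e = sigmoid(u * x + v) - 1.0      # gradient of -log D(x) w.r.t. the logit
        gu += e * x
        gv += e
    for x in fake:
        e = sigmoid(u * x + v)            # gradient of -log(1 - D(x)) w.r.t. the logit
        gu += e * x
        gv += e
    n = len(real)
    return u - lr * gu / n, v - lr * gv / n

def g_step(a, c, u, v, zs, lr):
    """Generator G(z) = a*z + c takes one step to minimize -log D(G(z))."""
    ga = gc = 0.0
    for z in zs:
        e = (sigmoid(u * (a * z + c) + v) - 1.0) * u   # d(loss)/d(G(z))
        ga += e * z
        gc += e
    n = len(zs)
    return a - lr * ga / n, c - lr * gc / n

rng = random.Random(0)
u, v = 0.0, 0.0        # discriminator parameters
a, c = 1.0, 0.0        # generator parameters
for _ in range(2000):
    real = [rng.gauss(4.0, 0.5) for _ in range(32)]   # hypothetical "real" data
    zs = [rng.gauss(0.0, 1.0) for _ in range(32)]     # random prior samples
    fake = [a * z + c for z in zs]
    u, v = d_step(u, v, real, fake, lr=0.05)
    a, c = g_step(a, c, u, v, zs, lr=0.05)
print("generator offset c:", c)
```

The random inputs `zs` play the role of the prior distribution discussed next. Whether the offset `c` settles near the real mean depends on the learning rate and step count; simple GANs like this one are known to oscillate rather than converge cleanly.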

At a high level, the difference between a GAN and a QC-AAN is that in a QC-AAN, the latent space in the discriminator (which provides a condensed, compressed description of the input data that approximately captures the data in fewer variables) is connected to the prior distribution in the generator (the random component in the generator that drives it to create unique data). This way, the latent space can provide the generator with samples that resemble the discriminator’s understanding of the data.

The “QC” part refers to the fact that the prior distribution is modeled by a quantum circuit, which is re-parameterized by the input from the latent space, speeding up and improving the training of the model. The advantage of using a quantum circuit here is that quantum circuits can encode probability distributions influenced by the latent space that would be difficult to express classically, and then can sample from these probability distributions more efficiently than a classical computer.
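To illustrate what it means for a quantum circuit to model a prior distribution, here is a small sketch that simulates a parameterized circuit classically and samples bitstrings from it. Everything specific is an assumption made for illustration: the circuit layout (one RY rotation per qubit followed by a chain of CNOT gates), the mapping from latent values to rotation angles, and the function names. The actual QC-AAN circuit is not described in this post, and a classical simulation like this one shows no quantum advantage, since its memory use doubles with every added qubit.

```python
import math
import random

def apply_ry(state, qubit, theta):
    """Apply an RY(theta) rotation to one qubit of a real-valued statevector.
    Bit `qubit` of the basis-state index holds that qubit's value."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    out = list(state)
    for i in range(len(state)):
        if not (i >> qubit) & 1:          # index where this qubit is 0
            j = i | (1 << qubit)          # partner index where it is 1
            out[i] = c * state[i] - s * state[j]
            out[j] = s * state[i] + c * state[j]
    return out

def apply_cnot(state, control, target):
    """Flip `target` wherever `control` is 1 by swapping amplitude pairs."""
    out = list(state)
    for i in range(len(state)):
        if (i >> control) & 1 and not (i >> target) & 1:
            j = i | (1 << target)
            out[i], out[j] = state[j], state[i]
    return out

def prior_probabilities(latent):
    """Build the circuit from latent values in [0, 1] and return the
    probability of each measurement outcome."""
    n = len(latent)
    state = [0.0] * (2 ** n)
    state[0] = 1.0                                   # start in |00...0>
    for q, h in enumerate(latent):
        state = apply_ry(state, q, math.pi * h)      # hypothetical latent-to-angle map
    for q in range(n - 1):
        state = apply_cnot(state, q, q + 1)          # entangle neighboring qubits
    return [amp * amp for amp in state]

def sample_prior(latent, shots, rng):
    """Draw `shots` bitstrings from the circuit's output distribution."""
    probs = prior_probabilities(latent)
    n = len(latent)
    outcomes = rng.choices(range(len(probs)), weights=probs, k=shots)
    return [format(o, f"0{n}b") for o in outcomes]

probs = prior_probabilities([0.5, 0.0])
samples = sample_prior([0.5, 0.0], shots=8, rng=random.Random(0))
print(probs)
print(samples)
```

With the latent values `[0.5, 0.0]`, the circuit prepares an entangled two-qubit state in which only the outcomes `00` and `11` occur, each with probability one half. Changing the latent values changes the rotation angles and therefore the distribution the generator draws from, which is the sense in which the latent space re-parameterizes the prior.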

The QDAI framework is a lot like the QC-AAN framework, except the discriminator is replaced by a more general classifier. This classifier could, for example, classify pictures of animals as dogs, cats, rabbits, pandas, gibbons, etc. Instead of trying to generate pictures of, say, dogs that the classifier would classify as dogs, the generator would generate pictures of dogs and add adversarial noise to try to trick the classifier into classifying the images as cats. In the facial recognition example from earlier, it would try to alter a picture of the spy so the classifier thinks it’s somebody with authorized access.

Much like in the QC-AAN, the generator learns from the output of the classifier and tweaks its parameters until it can successfully generate adversarial images that fool the classifier. These adversarial images are then used to train the classifier you want to defend, so it knows how to correctly classify these images. Alternatively, these quantum-boosted adversarial images could be used to fool your enemy’s AI, and they wouldn’t be able to defend against them unless they were also using QDAI.
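The attack-then-retrain loop described above can be sketched classically. The example below is not QDAI: it assumes a two-feature logistic classifier, and it replaces the quantum-assisted generator with a single signed-gradient step (the `attack` function). The data, starting weights, and `eps` are hypothetical, chosen so that the starting model leans on a feature that is easy to perturb.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def attack(w, b, x, y, eps):
    """Classical stand-in for the adversarial generator: move each feature
    by eps in the direction that increases the classifier's loss."""
    p = predict(w, b, x)
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def train(w, b, data, lr=0.5, steps=200):
    """Full-batch gradient descent on the mean cross-entropy loss."""
    w = list(w)
    for _ in range(steps):
        gw, gb = [0.0] * len(w), 0.0
        for x, y in data:
            err = predict(w, b, x) - y
            gw = [g + err * xi for g, xi in zip(gw, x)]
            gb += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, gw)]
        b -= lr * gb / len(data)
    return w, b

def accuracy(w, b, data):
    return sum((predict(w, b, x) > 0.5) == (y == 1) for x, y in data) / len(data)

# Feature 0 separates the classes widely; feature 1 only narrowly.
clean = [([1.0, 0.1], 1), ([-1.0, -0.1], 0)]
w0, b0 = [0.0, 10.0], 0.0     # hypothetical model relying on the narrow feature
eps = 0.2

# Step 1: generate adversarial inputs against the current classifier.
adv0 = [(attack(w0, b0, x, y, eps), y) for x, y in clean]
acc_under_attack_before = accuracy(w0, b0, adv0)

# Step 2: retrain on clean plus adversarial inputs with the correct labels.
w1, b1 = train(w0, b0, clean + adv0)

# Step 3: attack the retrained classifier with the same strength.
adv1 = [(attack(w1, b1, x, y, eps), y) for x, y in clean]
acc_under_attack_after = accuracy(w1, b1, adv1)
print(acc_under_attack_before, acc_under_attack_after)
```

On this toy data the starting model gets both adversarial inputs wrong. After retraining on the clean and adversarial examples together, it classifies fresh attacks of the same strength correctly, because training shifts weight onto the feature the attack cannot flip. Real models rarely behave this cleanly, and a defense trained against one kind of generator may still fail against a stronger one, which is the concern the post raises about quantum-generated attacks.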

Quantifying the Quantum Threat

QDAI leaves some lingering questions. From a government perspective, with national security on the line, the most important questions pertain to quantifying the quantum adversarial threat:

- How effectively can quantum-boosted attacks deceive AI?

- How difficult is it to defend against quantum attacks?

- How advanced does a quantum computer need to be to pose a threat that would be hard to negate with classical techniques?

- Will future advances in quantum technology make these attacks more potent?

Answering these questions should be a high priority for governments looking to maintain dominance in AI, as investments in quantum technology need to be made years in advance.

Zapata is uniquely suited to help quantify this threat, not only because we developed QDAI, but also because our Orquestra® platform is ideal for comparing how quantum applications perform on different hardware backends and running large-scale simulations of quantum algorithms.

For this reason, DARPA awarded us funding earlier this year to build application-specific benchmark tests to compare the performance of different quantum hardware backends. These tests will help us estimate the resources — both quantum and classical — needed for an application to achieve a benchmark performance level using different hardware setups.

In the same way, Orquestra can be used to benchmark the performance of QDAI on different hardware backends. This would give governments and other organizations that rely on AI a clearer picture of how disruptive quantum adversarial attacks could be — and when.