How Quantum Science Enhances Generative AI

For those new to Zapata AI (welcome!), you probably know us best for our Industrial Generative AI solutions. For those who have been following us since our founding, you may know us best for our quantum algorithms for enterprise applications. These two fields have been closely connected at Zapata since the beginning.

Zapata has a long history of quantum innovation. In 2017, we spun out of a quantum computing lab at Harvard. One of our founders, Alán Aspuru-Guzik, discovered the first quantum chemistry application for quantum computers. Zapata has continued this legacy, inventing new quantum algorithms for chemistry, finance, and other areas.

A key realization from the company's early days was that generative AI will likely be one of the first places we see a near-term quantum advantage. We've since built up an extensive track record of work in quantum generative AI.

In 2019, we filed our first patent for quantum techniques for generative AI. In 2020, we were the first to generate high-quality data using a quantum-enhanced generative model, and we then pioneered the use of quantum generative AI to boost optimization solutions. More recently, we published research showing the potential advantages of quantum-enhanced generative models for drug discovery and for manufacturing plant optimization.

Today, we’ve broadened our mission to apply the insights we’ve learned from our research in quantum science and generative AI to solve complex computational problems faced by enterprises, including both language-based tasks and complex mathematical modeling tasks.

This quantum research is what has set our generative AI solutions apart. But it doesn’t use actual quantum computers. Using mathematics from quantum science — including both quantum physics and quantum information science — our generative models can leverage the statistical advantages of quantum systems while running on classical computers.
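As a rough illustration of "quantum statistics on a classical computer", here is a minimal sketch of a Born machine built on a matrix product state (MPS), a tensor-network representation of a quantum state. This is an assumption for illustration only: the post does not say which quantum-inspired models Zapata uses, and the helper names (`random_mps`, `amplitude`, `norm_squared`, `born_probability`) are hypothetical. The model assigns probabilities with the Born rule, p(x) = |ψ(x)|² / Z, as a quantum measurement would, yet everything runs in NumPy.

```python
import itertools

import numpy as np


def random_mps(n_sites: int, bond_dim: int, seed: int = 0) -> list[np.ndarray]:
    """Random real-valued MPS: one tensor of shape (left_bond, 2, right_bond) per bit."""
    rng = np.random.default_rng(seed)
    tensors = []
    for i in range(n_sites):
        left = 1 if i == 0 else bond_dim
        right = 1 if i == n_sites - 1 else bond_dim
        tensors.append(rng.normal(size=(left, 2, right)))
    return tensors


def amplitude(mps: list[np.ndarray], bits: tuple[int, ...]) -> float:
    """Unnormalized amplitude psi(x): multiply together the matrices selected by each bit."""
    vec = np.ones(1)
    for tensor, b in zip(mps, bits):
        vec = vec @ tensor[:, b, :]
    return float(vec[0])


def norm_squared(mps: list[np.ndarray]) -> float:
    """Z = sum over all x of psi(x)^2, contracted site by site.

    Cost is polynomial in the number of sites and the bond dimension,
    instead of summing over all 2^n bitstrings."""
    env = np.ones((1, 1))
    for tensor in mps:
        # env[a, a'] * A[a, s, b] * A[a', s, b'] summed over a, a', s -> env[b, b']
        env = np.einsum("ij,isk,jsl->kl", env, tensor, tensor, optimize=True)
    return float(env[0, 0])


def born_probability(mps: list[np.ndarray], bits: tuple[int, ...]) -> float:
    """Born rule: p(x) = |psi(x)|^2 / Z."""
    return amplitude(mps, bits) ** 2 / norm_squared(mps)


mps = random_mps(n_sites=6, bond_dim=4)
# Brute-force check over all 2^6 bitstrings (only feasible for small n).
total = sum(born_probability(mps, bits) for bits in itertools.product((0, 1), repeat=6))
print(f"probabilities sum to {total:.6f}")
```

The bond dimension controls how much correlation between bits the model can capture; the normalization `norm_squared` stays cheap to compute, which is one reason tensor-network models are practical on classical hardware.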

Using quantum math for generative AI results in both higher quality and more diverse outputs.

Specifically, quantum statistics can enhance a generative model's ability to generalize, that is, to extrapolate beyond its training data and generate new, high-quality information. Generating genuinely new, high-quality data is very important for industrial use cases, particularly when the goal is to produce realistic synthetic data or plausible scenarios.

Consider a model trained to generate new molecules for medical treatments. With poor generalization, the model would simply recreate the drugs it was trained on, without understanding the rules for how these drugs work. A model with strong generalization would learn these rules and generate new drug candidates that follow them.
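One simple way to make the memorize-versus-generalize distinction measurable is to check how many generated samples are new and how many of those new samples still obey the domain rules. The sketch below is hypothetical: the metric names and the toy rule (6-bit strings with exactly three 1s, standing in for "chemically valid molecule") are assumptions, not Zapata's published evaluation framework.

```python
from collections.abc import Callable


def generalization_report(
    train: set[str], generated: list[str], is_valid: Callable[[str], bool]
) -> dict[str, float]:
    """Hypothetical metrics: does the model produce new samples, and do they follow the rules?"""
    novel = [s for s in generated if s not in train]
    novel_valid = [s for s in novel if is_valid(s)]
    return {
        # Fraction of samples that are not copies of training data.
        "novelty_rate": len(novel) / len(generated),
        # Fraction of the new samples that still satisfy the rules.
        "novel_validity": len(novel_valid) / len(novel) if novel else 0.0,
        # Number of distinct new, rule-following samples.
        "unique_novel_valid": len(set(novel_valid)),
    }


def is_valid(s: str) -> bool:
    return s.count("1") == 3  # toy "rule" standing in for chemical validity


train = {"111000", "000111", "101010"}
memorizer = ["111000", "000111", "101010", "111000"]            # only repeats training data
generalizer = ["110100", "011010", "111000", "100011", "111100"]  # mostly new, rule-following

print(generalization_report(train, memorizer, is_valid))
# {'novelty_rate': 0.0, 'novel_validity': 0.0, 'unique_novel_valid': 0}
print(generalization_report(train, generalizer, is_valid))
# {'novelty_rate': 0.8, 'novel_validity': 0.75, 'unique_novel_valid': 3}
```

The memorizing model scores perfectly on "looks like the training data" but produces nothing new; the generalizing model produces three new strings that follow the rule and one that breaks it.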

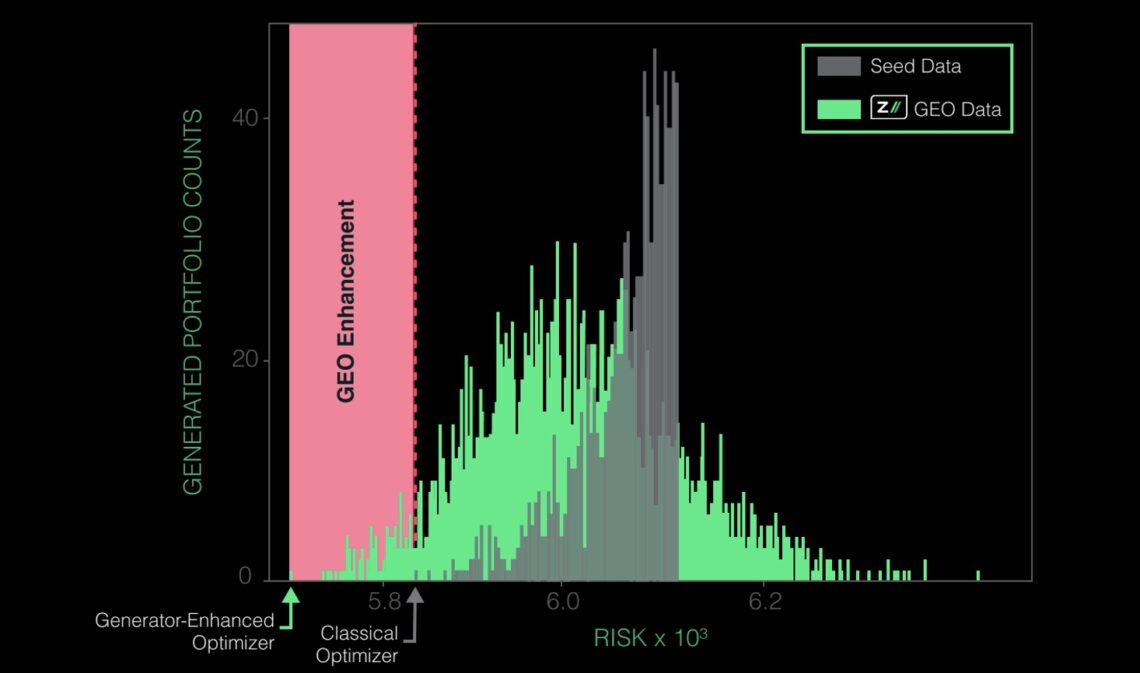

Quantum statistics can also let a model represent and generate a wider range of solutions, a property known as "expressibility". In the context of Industrial Generative AI applications, this can be used to generate more diverse synthetic datasets or new, previously unconsidered solutions to optimization problems. For example, our research found that quantum-enhanced generative models generated financial portfolios with lower risk (for the same return) than portfolios created by conventional optimization algorithms.

In the graph above, our generative model (GEO) generated lower-risk financial portfolios than the state-of-the-art optimizers whose solutions it was trained on.
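The pattern described above (train a generator on an optimizer's best solutions, then sample it for new candidates) can be sketched as a loop. This is a heavily simplified, hypothetical GEO-style sketch. The generator here is a plain product-of-Bernoullis model, not a quantum-inspired one, which makes the loop closer to the classical cross-entropy method than to GEO itself. It also minimizes the variance of an equal-weighted k-asset portfolio and ignores the return constraint for brevity. The random "seed" portfolios stand in for a conventional optimizer's outputs, and the returns are synthetic.

```python
import numpy as np


def portfolio_risk(selection: np.ndarray, cov: np.ndarray) -> float:
    """Variance of an equal-weighted portfolio over the selected asset indices."""
    w = np.zeros(cov.shape[0])
    w[selection] = 1.0 / len(selection)
    return float(w @ cov @ w)


def generator_enhanced_search(cov, k, seeds, rounds=20, samples=200, top=20, rng=None):
    """Simplified GEO-style loop: fit a generator to the best candidates so far,
    sample new candidates from it, and keep whichever portfolios score best."""
    if rng is None:
        rng = np.random.default_rng(0)
    n = cov.shape[0]
    pool = [np.sort(s) for s in seeds]
    for _ in range(rounds):
        pool.sort(key=lambda s: portfolio_risk(s, cov))
        elite = pool[:top]
        # Stand-in generator: per-asset selection frequencies in the elite set
        # (a product-of-Bernoullis model, NOT the tensor-network model used in GEO).
        freq = np.full(n, 1e-3)  # small floor so every asset can still be sampled
        for s in elite:
            freq[s] += 1.0
        probs = freq / freq.sum()
        new = [np.sort(rng.choice(n, size=k, replace=False, p=probs)) for _ in range(samples)]
        pool = elite + new  # the elite survives, so the best risk never gets worse
    return min(pool, key=lambda s: portfolio_risk(s, cov))


rng = np.random.default_rng(42)
returns = rng.normal(size=(250, 30))  # synthetic daily returns for 30 assets
cov = np.cov(returns, rowvar=False)
k = 5
# Seeds stand in for portfolios proposed by a conventional optimizer (here: random picks).
seeds = [rng.choice(30, size=k, replace=False) for _ in range(50)]
best_seed = min(portfolio_risk(s, cov) for s in seeds)
best = generator_enhanced_search(cov, k, seeds, rng=rng)
print(f"best seed risk {best_seed:.5f}, after generator loop {portfolio_risk(best, cov):.5f}")
```

Because the generator samples combinations that never appeared among the seeds, the loop can find portfolios the seeding optimizer did not propose; a more expressive generator can capture correlations between asset choices that this independent-bit model cannot.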

These quantum techniques can also be used to compress generative AI models, which can reduce their costs significantly.

Large language models (LLMs), such as the GPT-4 model behind ChatGPT, and other large models are energy-intensive and require the processing power of specialized graphics processing units (GPUs). GPUs are expensive to run and consume a lot of energy, which leaves a large carbon footprint. They are also harder to obtain, as skyrocketing demand for generative AI has led to GPU shortages.

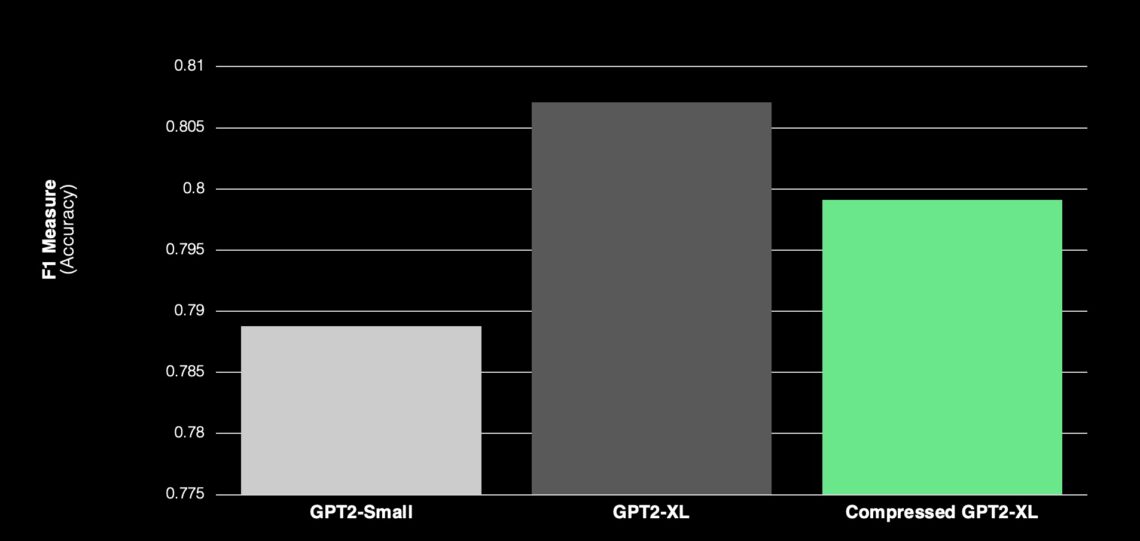

Models compressed with our quantum techniques use fewer parameters than their uncompressed counterparts, which means lower energy consumption, lower compute costs, and a smaller carbon footprint. We believe GPT2-XL is the largest language model compressed using quantum techniques to date, and we are working to compress larger models with the same techniques.
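The post does not name the compression method. Tensor networks are a common quantum-inspired choice, so the sketch below assumes a matrix product operator (MPO, also called a tensor-train matrix) factorization of a single dense weight matrix, computed with sequential truncated SVDs. The function names, the 64×64 matrix, the (4, 4, 4) factorization, and `max_rank=8` are all hypothetical choices for illustration.

```python
import numpy as np


def mpo_decompose(weight, in_dims, out_dims, max_rank):
    """Factor a dense (prod(out_dims) x prod(in_dims)) matrix into a chain of small
    MPO cores of shape (left_rank, out_j, in_j, right_rank) via truncated SVDs."""
    k = len(in_dims)
    # Reshape to (o1, ..., ok, i1, ..., ik), then interleave to (o1, i1, o2, i2, ...).
    t = weight.reshape(*out_dims, *in_dims)
    t = t.transpose([ax for j in range(k) for ax in (j, k + j)])
    cores, rank = [], 1
    for j in range(k - 1):
        mat = t.reshape(rank * out_dims[j] * in_dims[j], -1)
        u, s, vt = np.linalg.svd(mat, full_matrices=False)
        r = min(max_rank, len(s))  # truncation: keep the r largest singular values
        cores.append(u[:, :r].reshape(rank, out_dims[j], in_dims[j], r))
        t = s[:r, None] * vt[:r]  # carry the remainder on to the next site
        rank = r
    cores.append(t.reshape(rank, out_dims[-1], in_dims[-1], 1))
    return cores


def mpo_to_matrix(cores):
    """Contract the cores back into a dense matrix (to check the approximation)."""
    k = len(cores)
    out_dims = [c.shape[1] for c in cores]
    in_dims = [c.shape[2] for c in cores]
    t = cores[0]
    for core in cores[1:]:
        t = np.tensordot(t, core, axes=1)  # contract the shared bond index
    t = t.reshape([d for j in range(k) for d in (out_dims[j], in_dims[j])])
    t = t.transpose([2 * j for j in range(k)] + [2 * j + 1 for j in range(k)])
    return t.reshape(int(np.prod(out_dims)), int(np.prod(in_dims)))


rng = np.random.default_rng(0)
w = rng.normal(size=(64, 64))  # stand-in for one dense layer's weights
cores = mpo_decompose(w, in_dims=(4, 4, 4), out_dims=(4, 4, 4), max_rank=8)
print(w.size, sum(c.size for c in cores))  # 4096 1280 (dense vs. MPO parameter counts)
rel_err = np.linalg.norm(w - mpo_to_matrix(cores)) / np.linalg.norm(w)
print(f"relative reconstruction error: {rel_err:.3f}")
```

`max_rank` trades parameter count against fidelity. A random Gaussian matrix like this one compresses poorly, so the error here is large; the accuracy results described in this post come from Zapata's experiments on real models, not from this sketch.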

Not only do compressed models use fewer computational resources, but in our experiments they were also more accurate than uncompressed models of the same size. When we compressed GPT2-XL to the size of GPT2, the compressed model achieved higher accuracy than GPT2 despite using the same computational resources.

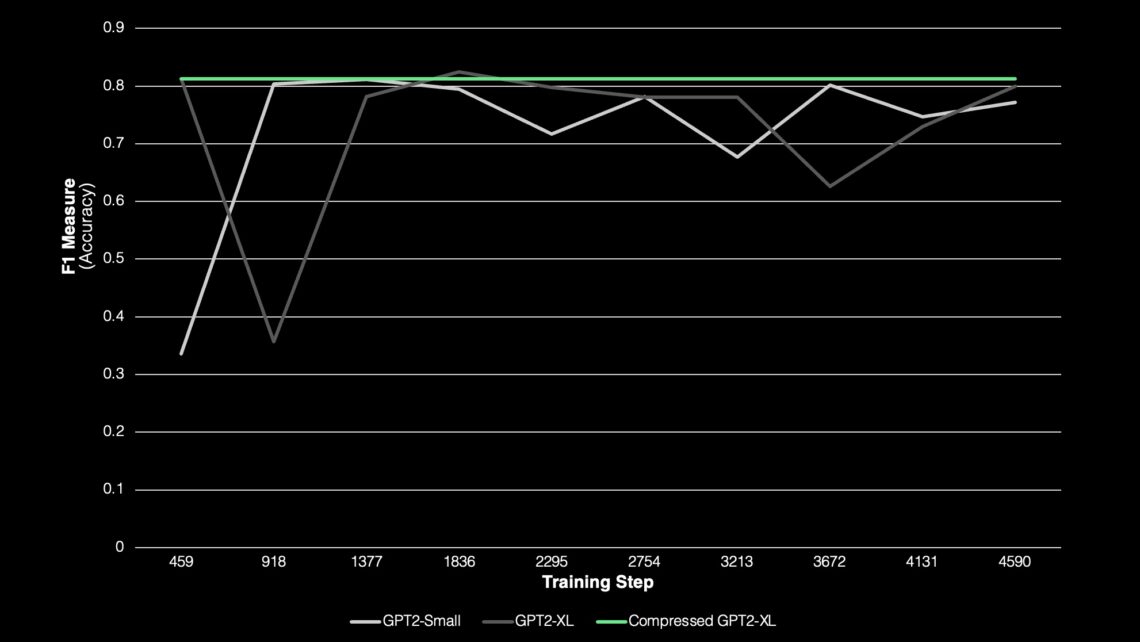

Models compressed with quantum techniques could also be trained at a lower cost. Our research found that the compressed models had more stable performance during training, meaning they reached more consistent accuracy in fewer training steps than the uncompressed models. Faster training would translate into lower compute costs and fewer tokens required to train the model.

This means compression could also unlock new use cases in scenarios where little training data is available. According to our research, compressed models can be trained to the same performance as their uncompressed counterparts using 300x less data. This could be particularly valuable for synthetic data and scenario simulation applications with limited precedent available to train the models.

In the meantime, until fault-tolerant quantum computers arrive, these quantum techniques will build a bridge to the quantum future. The quantum models we use today on classical hardware will be forward compatible with real quantum circuits, unlocking the additional advantages we anticipate from more powerful fault-tolerant quantum devices.

Our research will continue to close the gap between what's possible today using quantum math on classical computers and what will be possible on the quantum devices of the future. Along the way, our customers will be the first to leverage new breakthroughs in quantum techniques for generative AI, and eventually fault-tolerant quantum computers.